Problem

I was working towards a problem in managing a massive inventory for an online marketplace with millions of product listings from various sellers. Basically the system requires efficient storage, fast retrieval and scalability. The system should handle frequent updates (price changes and stock availability), also it must provide real time search capabilities for customers, and support diverse filtering options (eg: category price range, seller).

Additionally, it needs to accommodate varying data structure across sellers and we need a solution for both storing and querying. Scalability, durability and performance are critical to ensure smooth operations as the market place grows.

Major problem to solve is - the product listings, each varying in size and structure. Sellers frequently update product attributes like price and stock and product Images, products have to be stored and retrieved securely.

Now,

We have to store the Product listings (json) and images effectively in the backend from sellers.

We have to support the sub-second query responses to all the search queries from consumers.

Support the data growth over time as the business scales.

Ensure fast retrievals for search-heavy use cases like product filtering.

Handling data lifecycle, especially for orders and historical data.

NFR

Each report is sized between 100KB to 800 MB and it differs from sellers to sellers.

Rough example: 0.5MB per document × 500 documents per user per month, equalling up to 1.5GB per seller.

Solution Options

Relational Databases with JSON Support:

I started with PostgreSQL

It supports structured and JSON storage through its JSONB data type and It is a good choice for managing metadata and structured queries with transactional consistency.

But (as per NFR) storing large JSON documents (100 KB to 800 KB) entirely in postgresql, can result in degraded query performance as the data size grows and will lack the speed for real-time querying of large json objects.

But we have another option to consider here, say if your search criteria is limited to certain fields from the JSON you can store the frequently queries fields in the database and offload the entire document elsewhere.

NoSQL Databases

Next, i looked into MongoDB

It supports unstructured and semi-structured data efficiently. It excels at storing large json documents and offers flexible schemas, making it ideal for scenarios where JSON data varies significantly in structure.

But there’s a catch, it has row size limitations (16 MB per document by default) which can restrict its use for extremely large JSON files. It also supports read-heavy workloads and its querying capabilities are not optimized as ElasticSearch when dealing with full-text search requirements.

Object Storage

Object storage, like S3, was another option.

It is cost effective for storing large JSON docs and associated data and even we can write the files straight from memory with a PUT request to S3.

The plan is straightforward

Make a Report column in the Postgres DB or MongoDB with fields: timeStamp, id, partialReport, and relational field to the URL the report belongs to.

Here MongoDB and postgres would support a more compact binary JSON representation (In Postgres, declare the column as jsonb rather than json)

But, We have a problem here: Keeping the database and S3 in sync can be challenging, especially when migrating databases. It is a good idea to ensure that those external documents are immutable and content-addressable (e.g., SHA256 hash).

The tricky part is deleting the external documents when records are removed from the database. In practice, this might require a scheduled clean up process to solve these issues.

Caching

Caching came to mind, But it was’t practical for JSON files.

Why?

Caching the JSON easily saves you from performing expensive operations, but if the files are large only few entries fit in the cache.

Retrieval is not terribly expensive to retrieve (no complicated queries with scans or joins involved).

Keep in mind it is memory-limited and it is infeasible to keep all your data in this cache.

Elasticsearch

Finally, I considered Elasticsearch for real-time search and filtering.

It excels in distributed real-time, text-based search operations. It would be a good choice for scenarios where json documents need to be searched and filtered frequently.

But catch here is, though it excels in search performance, it is not designed as a primary storage solution for large json documents due to its cost and resource intensity for storage.

FinalSolution Recommendation

Architecture is certainly trade offs based on the requirements, here I took a hybrid approach.

After weighing the options, I took a hybrid architecture for goal

Handle small to read heavy operations with complex searches.

Storing of large JSON + images.

Support both structured queries and full-text search.

Balance Cost vs Performance optimization.

I have two approaches

Small to medium (up to 1m document, moderate search load)

From my personal experience postgresql can handle over 1M and i personally managed 3 million rows smoothly. However, performance depends on data model + indexing strategy and queries and your architecture and of course your hardware.

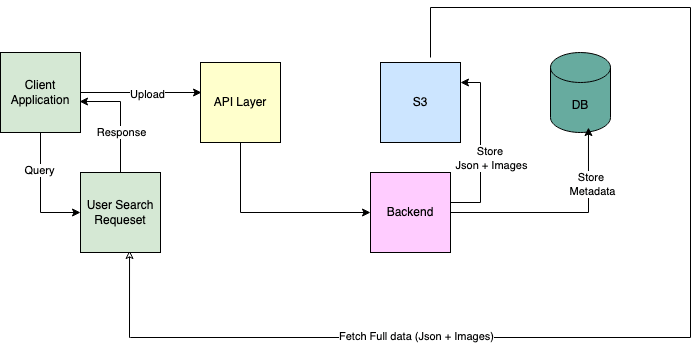

In this simple architecture when a client uploads data (like a product listing with images), typically it handles two simultaneous operations:

Storing metadata and indexes in PostgreSQL/MongoDB, and saving the full JSON data and images in S3.

When users search for something, their request hits the API layer, which then queries the database using its indexes for quick searches. If the search needs the complete information, the API fetches the full data from S3.

Large Scale (more than 5M to 50 million) heavy search load

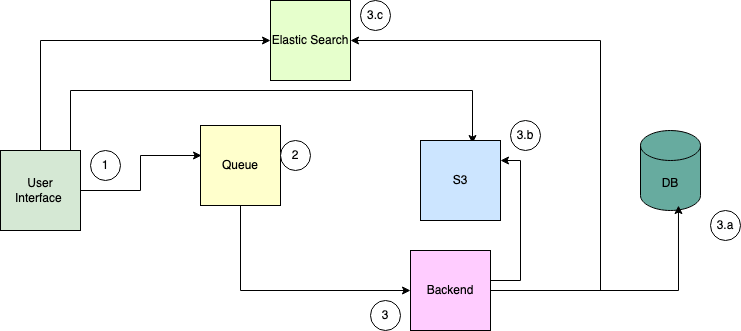

In the large-scale approach, we have to handle millions of documents, we would need a distributed approach.

Recommended approach is to push the data to Queueing (kafka) and ensure data gets distributed to three destinations.

Ingest product metadata into elasticsearch and index only search specific data (frequent queries)

Store Full product details (metadata) in to postgresql/mongodb

Move the complete json + images to S3

Metadata will have files linked via URLS stored in Elasticsearch/Postgresql database.

Let us visualize the flow. When user search, the system uses to paths:

Simple searches (like “find by ID”) can go directly to PostgreSQL/MongoDB. Complex searches (like "find all red shirts under $50 with good ratings") go to elastic search.

Both paths can fetch the complete data from S3 when needed where the complete json and images will be stored.

Note 1:

While this solution optimizes querying speed and storage costs, it introduces some complexity in managing multiple systems. Synchronizing data between Elasticsearch, MongoDB/Postgresql and S3 requires additional workflows, such as using a message queue like Kafka to ensure updates are processed consistently.

Note 2:

In implementation perspective, Asynchronous load is essential. By implementing lazy loading, product metadata can be fetched from elastic search first, while large files, such as images, are retrieved asynchronously from s3. Also, Caching frequently accessed files or images using a caching layer like Redis or a CDN significantly reduces retrieval times from S3, ensuring faster responses for end-users.

Conclusion

If the project is going to start new and small data then I would recommend start simple.

Basically do a cost estimation, benchmarking for technology you are choosing

Take into account that a split solution will take more development effort and specifically we have to choose based on the NFR.

From my recommendation, consider starting with a Postgres-only approach, and keeping everything in one DB is going to be simpler. Once the storage overhead becomes problematic, you can migrate the JSON documents into a different storage solution and replace the JSON column with a column of URLs.

This applies for only storage of JSON files.

Elastic search or similar tools will come into picture If you have external access, then i would recommend to decide based on your access patterns and costs, introducing a cache may be useful – but that's not required for an initial working solution.